Envision and Meta Partner to Revolutionize Accessibility with AI

At Envision, our mission has always been to make information and knowledge easily accessible to everyone, especially people who are blind or have low vision, people with cognitive disabilities, older adults, and people with low literacy. We are thrilled to announce a new partnership with Meta that combines advanced AI and wearable technology to push the boundaries of assistive tools. Together, we’re pioneering new solutions that empower individuals to navigate the world independently, with dignity and confidence.

Introducing ‘ally’: Your Personal Accessibility Assistant

A major highlight of our partnership is ‘ally’, a conversational personal AI assistant designed specifically for accessibility. Powered by Llama 3.1, Meta’s advanced open-source AI model, ‘ally’ helps blind and low-vision users easily access the information they need in their daily lives.

How does ally work?

ally uses Llama in two key ways:

1. Intent Recognition:

ally uses Llama’s language processing to understand the intent behind a user’s question. For instance, when you ask about the weather, ally recognizes that it needs to fetch that information from an external source, much as a person would if you asked them to check the weather for you. ally sends the request to a “weather helper” (a weather API), the helper returns the current conditions, and ally reads the answer back to you. If the user needs help reading a document, ally processes the text with OCR (Optical Character Recognition). By understanding the user’s intent, ally directs each query to the right model or service, delivering accurate and timely responses.

2. Text and Visual Processing:

Llama also powers ally’s ability to process and summarize text. For visual tasks, ally uses a fine-tuned version of LLaVA, a Llama-based model that specializes in multimodal tasks like interpreting images. Whether it’s describing a scene or recommending a dish from a restaurant menu, ally gives its users precise, contextual answers, making it an indispensable everyday assistant.
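To make the intent-recognition step more concrete, here is a minimal sketch in Python of how a query could be routed to the right helper. It is an illustration only, not ally’s actual implementation: the handler names (`get_weather`, `read_document`, `describe_scene`, `general_answer`) are hypothetical, and `classify_intent` uses simple keyword matching as a stand-in for the language model that would recognize intent in a real system.

```python
import re
from typing import Callable, Dict

# Hypothetical helpers. In a real assistant these would call a weather API,
# an OCR engine, or a vision-language model; here they return placeholders.
def get_weather(query: str) -> str:
    return "weather: (a weather API response would go here)"

def read_document(query: str) -> str:
    return "ocr: (text extracted from the document would go here)"

def describe_scene(query: str) -> str:
    return "vision: (an image description would go here)"

def general_answer(query: str) -> str:
    return "general: (a direct language-model answer would go here)"

# Map each intent label to the helper that serves it.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "weather": get_weather,
    "read_document": read_document,
    "describe_scene": describe_scene,
}

def classify_intent(query: str) -> str:
    """Stand-in for model-based intent recognition (assumption: keywords only)."""
    words = set(re.findall(r"[a-z']+", query.lower()))
    if words & {"weather", "forecast", "temperature"}:
        return "weather"
    if words & {"read", "document", "letter"}:
        return "read_document"
    if words & {"describe", "scene", "menu"}:
        return "describe_scene"
    return "general"

def route(query: str) -> str:
    """Pick a helper based on the recognized intent and return its answer."""
    handler = HANDLERS.get(classify_intent(query), general_answer)
    return handler(query)

if __name__ == "__main__":
    for question in ["What's the weather today?", "Please read this letter"]:
        print(question, "->", route(question))
```

The design point is the separation of concerns: one component decides what the user wants, and a lookup table sends the request to whichever helper can answer it, so new helpers can be added without changing the routing logic.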

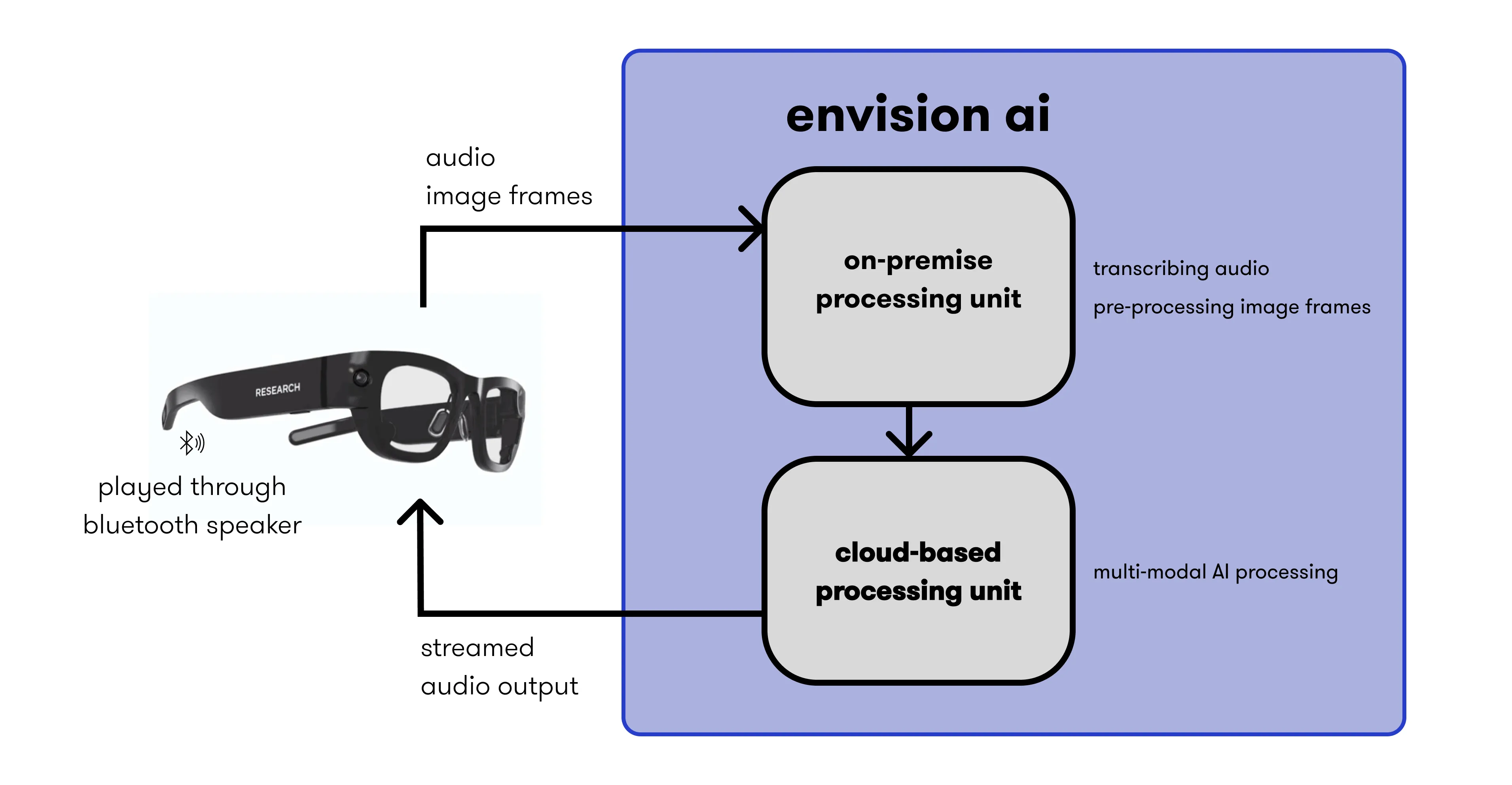

Project Aria and Wearable AI

In addition to ally, our partnership with Meta involves a groundbreaking prototype on Meta’s Project Aria glasses. By integrating Llama’s language processing and computer vision, these glasses allow users to access visual information through speech. This innovation brings us closer to a world where individuals who are blind or have low vision can independently interact with their surroundings using wearable AI technology.

Meta Connect and Envision’s Llama Poster Presentation

As part of this exciting collaboration, Envision will be presenting a technical poster at Meta Connect on September 25th, where we’ll dive deeper into how we’re using Llama to enhance accessibility for our users. The poster focuses on:

- Inference Advantages at Scale: How Llama’s open-source nature allows for cost-effective, scalable solutions with high-speed inference, making it ideal for assistive technology like ours.

- Function Calling: Llama’s ability to simplify complex workflows by routing queries to the appropriate AI tasks, streamlining the user experience.

- Multimodal Capabilities: Envision’s fine-tuning of Llama, integrating vision and language processing to power smart glasses.

Our poster will also compare Llama’s performance to other leading AI models, demonstrating its efficiency in real-world tasks for over 500,000 blind and low-vision users across 200 countries. This presentation will highlight our collaborative effort with Meta and showcase how open-source AI can be a transformative tool for accessibility.

A New Era of Independence

Envision’s partnership with Meta marks a significant leap forward in creating accessible, AI-driven solutions that empower users to engage with the world around them. As we present our findings at Meta Connect, we remain committed to our mission: making information and knowledge accessible to everyone, regardless of their abilities.
